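Using the hardware encoder (NVENC) and decoder (NVDEC) requires a few more parameters that tell ffmpeg which decoder and encoder to use. Maximizing transcoding speed also means keeping the decoded frames in GPU memory so the encoder can access them efficiently:

    ffmpeg -hwaccel cuda -hwaccel_output_format cuda -i input.mp4 -c:v h264_nvenc -b:v 5M output.mp4

`-hwaccel cuda` selects CUDA hardware-accelerated decoding (NVDEC), `-hwaccel_output_format cuda` keeps the decoded frames in GPU memory instead of copying them back to system memory, and `-c:v h264_nvenc` selects the NVENC H.264 encoder.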
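The same command can be wrapped in a small Bash function so that the role of each flag is spelled out next to it. This is a minimal sketch that assumes an `ffmpeg` on `PATH` built with NVDEC/NVENC support; the function name `gpu_transcode`, the `DRY_RUN` switch, and the 5M fallback bitrate are illustrative choices, not part of FFmpeg.

```bash
#!/usr/bin/env bash
# Minimal sketch: 1:1 transcode kept on the GPU (NVDEC decode -> NVENC encode).
# Assumes an ffmpeg on PATH built with CUDA/NVDEC/NVENC support.
# gpu_transcode and DRY_RUN are hypothetical names used for illustration.
set -euo pipefail

gpu_transcode() {
  local input=$1 output=$2 bitrate=${3:-5M}
  local -a cmd
  cmd=(
    ffmpeg
    -hwaccel cuda                 # decode on NVDEC
    -hwaccel_output_format cuda   # keep decoded frames in GPU memory
    -i "$input"
    -c:v h264_nvenc               # encode on NVENC
    -b:v "$bitrate"               # target video bitrate
    "$output"
  )
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print the command instead of running it (shell quoting is not preserved).
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Call it as `gpu_transcode input.mp4 output.mp4 5M`, or prefix `DRY_RUN=1` to print the command for review without running it.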

#FFmpeg resize frame software#

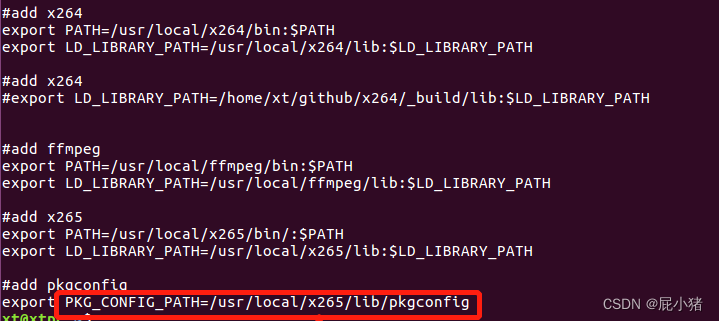

Configure FFmpeg with the following command (adjust the CUDA paths to match your installation):

    ./configure --enable-cuda --enable-cuvid --enable-nvdec --enable-nvenc --enable-nonfree --enable-libnpp --extra-cflags=-I/usr/local/cuda/include --extra-ldflags=-L/usr/local/cuda/lib64

Build with multiple parallel jobs to speed up the build and suppress excessive output:

    make -j -s

Using FFmpeg to do a software 1:1 transcode is simple:

    ffmpeg -i input.mp4 -c:a copy -c:v h264 -b:v 5M output.mp4

`-c:a copy` copies the audio stream without re-encoding it, and `-c:v h264` selects the software H.264 encoder for the output video stream. This path is slow, however, because it uses only the CPU-based software encoder and decoder.
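Before switching to the hardware path, it can help to confirm that the new build actually exposes the NVIDIA components. The sketch below parses the listings printed by `ffmpeg -hide_banner -encoders` and `ffmpeg -hide_banner -hwaccels`; the helper names and the default `./ffmpeg` path (the binary `make` leaves in the source tree) are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Minimal sketch: check that a fresh FFmpeg build exposes NVENC and CUDA decoding.
# has_entry and check_nvidia_build are hypothetical helper names.
set -euo pipefail

# Succeed if the listing on stdin has "$2" in whitespace-separated column "$1".
# In `ffmpeg -encoders` output the codec name is column 2 (after the flags);
# in `ffmpeg -hwaccels` output each method name sits alone on a line (column 1).
has_entry() {
  awk -v col="$1" -v name="$2" '$col == name { found = 1 } END { exit !found }'
}

check_nvidia_build() {
  local ffmpeg=${1:-./ffmpeg}
  if ! "$ffmpeg" -hide_banner -encoders | has_entry 2 h264_nvenc; then
    echo "h264_nvenc encoder not found" >&2
    return 1
  fi
  if ! "$ffmpeg" -hide_banner -hwaccels | has_entry 1 cuda; then
    echo "cuda hwaccel not found" >&2
    return 1
  fi
  echo "NVENC encoder and CUDA hwaccel are available"
}
```

Running `check_nvidia_build` from the build directory (or `check_nvidia_build /path/to/ffmpeg`) fails with a message if either component is missing, which usually points back to a configure flag or CUDA path.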

#FFmpeg resize frame install#

#FFmpeg resize frame driver#

The ideal transcoding solution for the datacenter must be cost-effective (dollars per stream) and power-efficient (watts per stream) while delivering high-quality content at maximum throughput. Video providers want to reduce the cost of delivering more high-quality content to more screens, and the massive volume of video generated on all fronts requires robust hardware acceleration of encoding, decoding, and transcoding. Let's look at how NVIDIA GPUs incorporate dedicated video processing hardware and how you can take advantage of it.

NVIDIA GPUs ship with an on-chip hardware encoder and decoder, often referred to as NVENC and NVDEC. Because these units are separate from the CUDA cores, NVENC and NVDEC can run encoding or decoding workloads without slowing down graphics or CUDA workloads running at the same time.
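The two datacenter metrics above are simple ratios: total cost divided by the number of concurrent streams a server sustains, and total power draw divided by the same stream count. The sketch below computes both; the function name is hypothetical, and any figures you feed it are your own placeholders, since real stream counts depend on the GPU, codec, resolution, and preset and have to be measured.

```bash
#!/usr/bin/env bash
# Minimal sketch: dollars/stream and watts/stream for one transcoding server.
# stream_economics is a hypothetical helper; all inputs are user-supplied numbers.
set -euo pipefail

# $1 = cost in dollars, $2 = power draw in watts,
# $3 = number of concurrent streams sustained (positive integer).
stream_economics() {
  local cost=$1 watts=$2 streams=$3
  if [ "$streams" -le 0 ]; then
    echo "streams must be a positive integer" >&2
    return 1
  fi
  awk -v c="$cost" -v w="$watts" -v s="$streams" \
    'BEGIN { printf "dollars/stream=%.2f watts/stream=%.2f\n", c / s, w / s }'
}
```

Lower is better for both ratios. For example, with purely hypothetical inputs, `stream_economics 1200 300 40` prints `dollars/stream=30.00 watts/stream=7.50`.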

All NVIDIA GPUs starting with the Kepler generation support fully accelerated hardware video encoding, and all GPUs starting with the Fermi generation support fully accelerated hardware video decoding. As of July 2019, Kepler, Maxwell, Pascal, Volta, and Turing GPUs support hardware encoding, and Fermi, Kepler, Maxwell, Pascal, Volta, and Turing GPUs support hardware decoding.

The processing demands of high-quality video applications have pushed the limits of broadcast and telecommunication networks. Consumer behavior has evolved, as the trends in OTT video subscriptions and the rapid uptake of live streaming show, and social media applications now commonly offer live streaming on their platforms. Live streaming will drive overall video traffic growth on both cellular and Wi-Fi networks as consumers move beyond on-demand video to live streams. Video delivered to viewers is often transcoded into several adaptive bit rate (ABR) profiles, and content in production may arrive in any of a large number of codec formats that must be transcoded into another format for distribution or archiving. This makes transcoding a critical piece of an efficient video pipeline, whether the workload is 1:N or M:N.
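A 1:N ABR transcode can reuse the GPU-resident pipeline: decode once with NVDEC, then give each output its own resize and NVENC encode. The sketch below prints such a command. It assumes the `scale_npp` filter, which is available when FFmpeg is configured with `--enable-libnpp` as above (builds without libnpp may offer `scale_cuda` instead); the `WIDTHxHEIGHT:BITRATE` rung syntax, the `out_<height>p.mp4` file names, and the helper name are hypothetical choices.

```bash
#!/usr/bin/env bash
# Minimal sketch: one decode, several resized NVENC outputs (1:N ABR ladder).
# Assumes ffmpeg was built with NVDEC/NVENC and libnpp (for scale_npp).
# abr_ladder_cmd, the rung format, and the output names are hypothetical.
set -euo pipefail

# Usage: abr_ladder_cmd INPUT RUNG... where each RUNG is WIDTHxHEIGHT:BITRATE.
abr_ladder_cmd() {
  local input=$1
  shift
  local -a cmd
  cmd=(ffmpeg -hwaccel cuda -hwaccel_output_format cuda -i "$input")
  local rung w h bitrate
  for rung in "$@"; do
    # Split e.g. "1280x720:3M" into width, height and bitrate.
    IFS='x:' read -r w h bitrate <<< "$rung"
    # Output options apply to the output file that follows them,
    # so each rung gets its own scale filter, encoder and bitrate.
    cmd+=(-vf "scale_npp=${w}:${h}" -c:v h264_nvenc -b:v "$bitrate"
          -c:a copy "out_${h}p.mp4")
  done
  # Printed for review; paths containing spaces are not re-quoted.
  echo "${cmd[*]}"
}
```

For example, `abr_ladder_cmd input.mp4 1280x720:3M 640x360:1M` describes two renditions produced from a single decode. To execute rather than print, run the array with `"${cmd[@]}"` instead of echoing it.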
